This is a security data lake

Understanding these simple data architecture concepts can help your SOC succeed

The prospect of scale, savings and analytics has brought security data lakes into the limelight. But there are pitfalls to watch for, and questions to ask, when evaluating storage options beyond what comes with your SIEM. There’s also exciting innovation to track as the cloud data space continues to evolve.

What is a data lake

The most important thing to know about data lakes in the cloud is that they are built on something called “object storage”. AWS S3 is the best-known object storage service, and Microsoft Azure calls its equivalent Blob Storage. These services are like a massive virtual filing cabinet in the sky. Each piece of data, whether it's a photo, a document or a video, is stored as an "object."

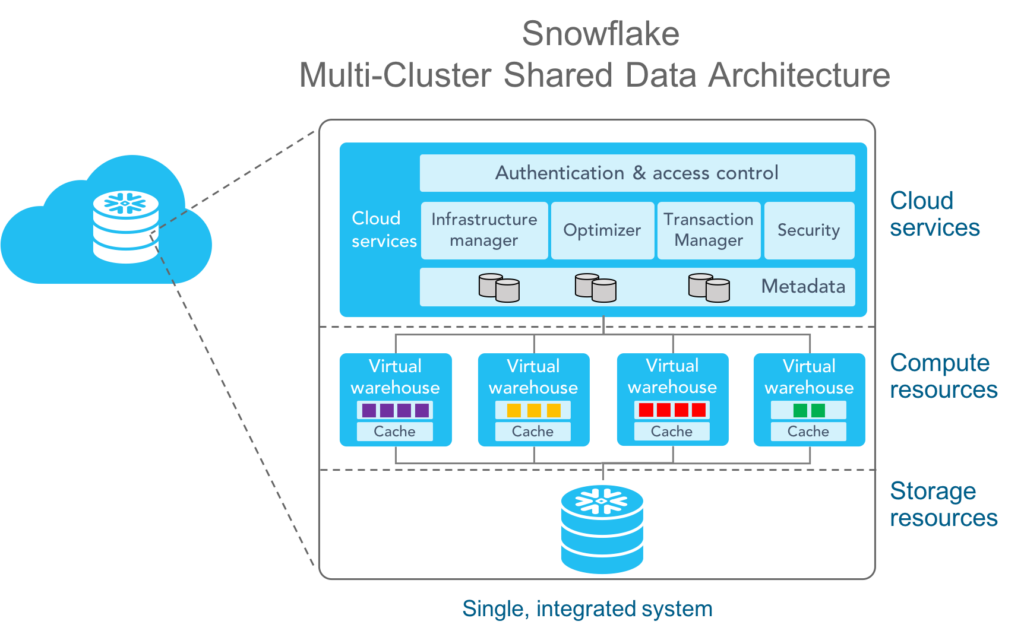

Object storage services like S3 hold exabytes of data, and with the proper permissions you can upload and download virtually unlimited gobs of information. What you can’t do is search or analyze the contents of those files in place, at least not without using another service. That’s what Snowflake means when it talks about separating storage from compute: on AWS, it uses one service for storing data in files (AWS S3) and a different service (AWS EC2) for working with the data in those files.
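To make the split concrete, here is a minimal toy model in plain Python, assuming nothing about any real cloud API. `ObjectStore` is a hypothetical stand-in for S3 or Blob Storage: it can only put, get and list objects by key. Searching those objects happens in a separate function that plays the role of the compute service, fetching bytes from storage and doing the actual work.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectStore:
    """Hypothetical toy object store: it can only put, get and list objects by key."""
    objects: dict[str, bytes] = field(default_factory=dict)

    def put(self, key: str, body: bytes) -> None:
        self.objects[key] = body

    def get(self, key: str) -> bytes:
        return self.objects[key]

    def list_keys(self, prefix: str = "") -> list[str]:
        return sorted(k for k in self.objects if k.startswith(prefix))


def search(store: ObjectStore, prefix: str, needle: str) -> list[str]:
    """Separate 'compute' step: fetch each object, decode it and keep matching lines."""
    hits = []
    for key in store.list_keys(prefix):
        for line in store.get(key).decode("utf-8").splitlines():
            if needle in line:
                hits.append(line)
    return hits


if __name__ == "__main__":
    store = ObjectStore()
    store.put("logs/2024/01/01.log", b"user=alice action=login\nuser=bob action=logout\n")
    store.put("logs/2024/01/02.log", b"user=alice action=delete\n")
    print(search(store, "logs/2024/", "alice"))
    # prints ['user=alice action=login', 'user=alice action=delete']
```

Because the store knows nothing about the contents, you could swap in a different `search` (or run several at once) without touching the stored objects, which is the property the data lake approach relies on.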

The separation of storage from compute is consistent across data lake solutions, including Amazon Security Lake. The diagram below shows S3 as the storage piece and separate services like Amazon Athena and Amazon OpenSearch Service for querying.

Data lakes in the cloud are low maintenance, reliable and flexible. Another key advantage is that they can serve multiple use cases, not just log search. For example, an enterprise might use the same data lake for reporting last quarter’s sales figures (a BI use case) and for predicting future trends (a data science use case). Security teams should keep this aspect in mind when evaluating data platform options.

What is not a data lake

Let’s try applying our newfound data lake expertise to some popular log management solutions that sometimes get mistaken for data lakes. We’ll see that understanding the internal architecture of these platforms will help us to anticipate their limitations.

For example, Elasticsearch is a popular solution for storing log data. But does running Elasticsearch in AWS give us a data lake? Here’s why it doesn’t: Elasticsearch storage is tightly coupled with compute.

The diagram below shows a three-node Elasticsearch cluster, where each node (a physical or virtual server) stores several shards of data. If we wanted to add more storage to this cluster, we would soon have to add more nodes, even if we didn’t need any more compute.

The tight coupling of storage and compute is reflected in the AWS guide for Elasticsearch deployment. Even though the deployment is all virtual and cloudy, the storage piece is attached to the virtual machine instance. Check out the volume type (General Purpose Solid State Drive) and how “Delete on Termination” is checked: the expectation is that you don’t want virtual disks left orphaned once their virtual machine is gone.
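A rough back-of-the-envelope sketch shows why retention drives node count when disks are attached to nodes. The function below is hypothetical and deliberately simplified: it assumes the index takes the same space as raw ingest, that each replica is a full extra copy, and that disks are only filled to 75% for headroom. None of these figures come from the AWS guide or from Elasticsearch defaults.

```python
import math


def nodes_needed(daily_gb, retention_days, replicas, disk_per_node_gb, max_fill=0.75):
    """Rough count of data nodes when every retained byte must sit on node-attached disk.

    Simplifying assumptions (hypothetical): index size equals raw ingest size,
    each replica is a full extra copy, and disks are only filled to `max_fill`.
    """
    total_gb = daily_gb * retention_days * (1 + replicas)
    usable_gb_per_node = disk_per_node_gb * max_fill
    return math.ceil(total_gb / usable_gb_per_node)


if __name__ == "__main__":
    # Illustrative inputs: 100 GB/day, one replica, 2,000 GB disk per node.
    print(nodes_needed(100, 90, 1, 2000))   # 90 days of retention -> 12
    print(nodes_needed(100, 365, 1, 2000))  # 365 days of retention -> 49
```

Under these assumptions, stretching retention from 90 days to a year roughly quadruples the node count, and every one of those nodes brings compute you pay for whether or not anyone is searching.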

Taking the time to understand the relationship between storage and compute is critical for a scalable security architecture. A smart question to ask when evaluating solutions might be: “Will our data reside in storage volumes or in an object store?”

Splunk is another popular log management solution that generally uses disk storage when deployed on-premises or in the cloud. As described in “Building scalable AWS-based Splunk Architectures”, deploying Splunk in production requires you to “Optimize Instance EC2 and Storage size for your needs” and to select which EBS storage volume type to attach to your virtual machines. Those are all terms for virtual machines and their disks. Notice in the diagram below how S3 is used: for backing up snapshots, not for active searching.

This tight coupling between storage and compute is why Splunk Cloud defaults to 90 days of retention and doesn’t list the cost of going beyond that. Having an archive tier that requires restoring data before it can be searched is another sign that the architecture couples storage and compute.

Many of the challenges around effective threat detection and response are symptoms of an architecture where storage and compute are tightly coupled. High up-front ingest costs, retention constraints and storage tier management can all be addressed through a data lake approach. But on its own, that data lake is not going to be very useful for the SOC.

What makes it a security data lake

My own security data lake journey involved hiring security engineers to build all of the components around the data platform. We created a rules engine in Python and wrote all of our detections in SQL from scratch. It took over a year to complete, and I was fortunate to have access to those rare birds who can code, threat model and investigate alerts.
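As a rough illustration of that pattern (not the code we actually built), here is a minimal sketch of a Python loop that runs SQL detections and collects alerts. It uses an in-memory SQLite database as a stand-in for the data platform; the table names, columns, thresholds and both detection rules are hypothetical.

```python
import sqlite3

# Hypothetical detections: each rule is a name plus a SQL query over the demo tables.
DETECTIONS = {
    "brute_force": """
        SELECT username, COUNT(*) AS failures
        FROM logins
        WHERE success = 0
        GROUP BY username
        HAVING COUNT(*) >= 5
    """,
    "login_from_new_country": """
        SELECT l.username, l.country
        FROM logins AS l
        WHERE l.success = 1
          AND l.country NOT IN (
              SELECT k.country FROM known_countries AS k WHERE k.username = l.username
          )
    """,
}


def run_detections(conn, detections):
    """Run every SQL detection and return a list of (rule_name, result_row) alerts."""
    alerts = []
    for name, sql in detections.items():
        for row in conn.execute(sql):
            alerts.append((name, row))
    return alerts


def build_demo_db():
    """In-memory SQLite database standing in for log tables in the data platform."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logins (username TEXT, country TEXT, success INTEGER)")
    conn.execute("CREATE TABLE known_countries (username TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO logins VALUES (?, ?, ?)",
        [("bob", "US", 0)] * 5 + [("alice", "FR", 1)],
    )
    conn.execute("INSERT INTO known_countries VALUES ('alice', 'US')")
    return conn


if __name__ == "__main__":
    print(run_detections(build_demo_db(), DETECTIONS))
    # prints [('brute_force', ('bob', 5)), ('login_from_new_country', ('alice', 'FR'))]
```

Keeping detections as plain SQL means the same logic runs wherever the data lives; the Python side only schedules queries and routes results.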

Luckily, the vendor landscape has changed a lot in the past five years. There’s now a vibrant ecosystem of providers ready to turn your company’s existing cloud data platform into a security data lake. Off-the-shelf solutions can provide integrations, a rules engine, detection content, investigation interfaces and automation options. I’ve seen dozens of Snowflake customers go from concept to production without hiring a single developer, which shows that security data lakes have become accessible outside the very largest enterprises for the first time.

The rules engine is where a security team applies its expertise to the data lake. Without it, the data lake is just a glorified backup repository. And the rules engines available to turn Snowflake, in particular, into a security data lake have matured significantly in recent years. For example, at Anvilogic we have a rules engine that works at two levels: one level of logic turns logs into events of interest, and another combines events of interest into threat scenarios. There is also versioning, an AI copilot, and other features that operationalize the data lake for threat detection and hunting.
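To illustrate the general two-level idea, here is a minimal sketch in plain Python. It is not Anvilogic’s engine or API; the rule names, log fields, domain-rank threshold and 600-second window are all hypothetical. Level one tags individual logs as events of interest; level two fires a scenario when one host shows every required tag within a time window.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventOfInterest:
    host: str
    ts: int  # seconds since epoch
    tag: str


# Level 1 (hypothetical rules): predicates that turn a raw log into a tagged event.
EVENT_RULES = {
    "office_spawns_shell": lambda log: (
        log.get("parent") == "winword.exe"
        and log.get("process") in {"cmd.exe", "powershell.exe"}
    ),
    "outbound_to_rare_domain": lambda log: (
        log.get("type") == "dns" and log.get("domain_rank", 0) > 1_000_000
    ),
}

# Level 2 (hypothetical scenario): all required tags on one host within `window` seconds.
SCENARIOS = {
    "phish_to_c2": ({"office_spawns_shell", "outbound_to_rare_domain"}, 600),
}


def to_events(logs):
    """Apply every level-1 rule to every log and emit one event per match."""
    return [EventOfInterest(log["host"], log["ts"], tag)
            for log in logs
            for tag, rule in EVENT_RULES.items() if rule(log)]


def match_scenarios(events):
    """Return (scenario, host) pairs where all required tags fall within the window."""
    by_host = {}
    for ev in events:
        by_host.setdefault(ev.host, []).append(ev)
    hits = []
    for name, (required, window) in SCENARIOS.items():
        for host, evs in by_host.items():
            evs = sorted(evs, key=lambda e: e.ts)
            # Slide the window start across each event; later events are already sorted.
            for i, start in enumerate(evs):
                seen = {e.tag for e in evs[i:] if e.ts - start.ts <= window}
                if required <= seen:
                    hits.append((name, host))
                    break
    return hits


if __name__ == "__main__":
    logs = [
        {"host": "ws1", "ts": 100, "parent": "winword.exe", "process": "powershell.exe"},
        {"host": "ws1", "ts": 400, "type": "dns", "domain_rank": 5_000_000},
        {"host": "ws2", "ts": 100, "type": "dns", "domain_rank": 5_000_000},
    ]
    print(match_scenarios(to_events(logs)))  # prints [('phish_to_c2', 'ws1')]
```

Splitting the logic this way lets a single noisy signal (a rare domain on `ws2`) stay an event of interest rather than an alert, while the combination on `ws1` escalates.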

When evaluating security data lake options, make sure you’re being given direct access to the data platform. A security data lake should support any kind of data you want to load, from logs to asset inventory, vulnerability findings and identity details. While your initial security use case may be search-oriented, your data lake should still support reporting, data science and machine learning without having to move the data around. That way your security team is not missing out on all the innovation happening around LLMs and self-service analytics. Use cases that are considered cutting edge today will be table stakes soon enough, so design your architecture accordingly.
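As a small illustration of why co-locating non-log data matters, here is a sketch that enriches alerts with asset inventory and vulnerability findings in a single query. It uses an in-memory SQLite database as a hypothetical stand-in for the data platform; all table names, hosts, owners and finding IDs are made up.

```python
import sqlite3


def build_demo_lake():
    """Hypothetical tables: detections, asset inventory and vulnerability findings side by side."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE alerts (host TEXT, rule TEXT);
        CREATE TABLE assets (host TEXT, owner TEXT, criticality TEXT);
        CREATE TABLE vulns (host TEXT, finding_id TEXT);
        INSERT INTO alerts VALUES ('web01', 'brute_force'), ('ws42', 'phish_to_c2');
        INSERT INTO assets VALUES ('web01', 'ecommerce', 'high'), ('ws42', 'finance', 'low');
        INSERT INTO vulns VALUES ('web01', 'VULN-1'), ('web01', 'VULN-2');
    """)
    return conn


# Enrich each alert with owner, criticality and open finding count; high criticality first.
TRIAGE_REPORT = """
    SELECT a.host, a.rule, s.owner, s.criticality, COUNT(v.finding_id) AS open_findings
    FROM alerts AS a
    JOIN assets AS s ON s.host = a.host
    LEFT JOIN vulns AS v ON v.host = a.host
    GROUP BY a.host, a.rule, s.owner, s.criticality
    ORDER BY s.criticality = 'high' DESC, open_findings DESC
"""

if __name__ == "__main__":
    for row in build_demo_lake().execute(TRIAGE_REPORT):
        print(row)
    # ('web01', 'brute_force', 'ecommerce', 'high', 2)
    # ('ws42', 'phish_to_c2', 'finance', 'low', 0)
```

The same tables could just as easily feed a dashboard or a notebook, which is the point: no copy of the data has to be exported to a separate reporting system.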

In summary, a modern security data lake has the following properties:

Storage is based on an object store (whether directly in S3 or transparently as in Snowflake)

Queries use compute that’s separate from storage and available on-demand

Flexible analytics options are supported, including enterprise dashboards and data science tools

A rules engine designed for security use cases is plugged into the data lake

Winter is coming: Apache Iceberg

A major development is underway that I expect will give security data lakes a further boost in price/performance and adoption. Apache Iceberg, an open table format initially developed at Netflix, is being embraced by Google, AWS, Snowflake, Databricks and pretty much everyone else. Iceberg provides a standard for tracking metadata within your data lake so that files aren’t just hanging around: any compatible query engine has a kind of map to find what the user is searching for. In essence, Iceberg enables performance and governance without having to move cloud-based data out of its source.
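One way that “map” helps is file pruning. Iceberg manifests record per-file statistics such as column lower and upper bounds, so an engine can skip files that cannot contain matching rows. The sketch below is a toy model of that idea only; it is not the Iceberg specification, file layout or the pyiceberg API, and the bucket paths and time ranges are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFileEntry:
    """One row of a toy manifest: a data file plus min/max stats for its timestamp column."""
    path: str
    min_ts: int
    max_ts: int


def plan_scan(manifest, start_ts, end_ts):
    """Keep only files whose [min_ts, max_ts] range overlaps the query's time range."""
    return [f.path for f in manifest if f.max_ts >= start_ts and f.min_ts <= end_ts]


# Hypothetical manifest: one file per day of DNS logs.
MANIFEST = [
    DataFileEntry("s3://example-bucket/dns/day1.parquet", 0, 86_399),
    DataFileEntry("s3://example-bucket/dns/day2.parquet", 86_400, 172_799),
    DataFileEntry("s3://example-bucket/dns/day3.parquet", 172_800, 259_199),
]

if __name__ == "__main__":
    print(plan_scan(MANIFEST, 90_000, 100_000))
    # prints ['s3://example-bucket/dns/day2.parquet']
```

Because this metadata lives alongside the files rather than inside one vendor’s engine, any compatible engine can read it and plan the same pruned scan.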

For a SOC that moves around terabytes of log data in their cloud environment, this approach may soon enable more savings on infrastructure costs while improving flexibility in how the data is queried. For example, Iceberg-formatted data in S3 may be queried by one team using Spark and another team using Snowflake. The pace of data lake innovation is unlike anything we’re used to in the cybersecurity industry. Security organizations that handle data at scale shouldn’t wait any longer to dive in.