Busting Four Myths on Usage-Based Pricing for Security Operations

Why the best cost model for security data is measured by the second

Usage-based pricing for security operations is about as popular as trepanning for curing headaches. But does the “pay for what you use” model deserve a closer look? This post will highlight four concerns I’ve heard from security leaders and why SOCs should embrace the cost model that’s helped unleash data-driven transformations across the enterprise.

What is Usage-Based Pricing?

When data platforms ran on-premises, physical servers were deployed based on an expectation of how much compute power would be needed to keep users happy. In off-hours, the utilization of those resources was low, and when things got busy, the available resources were maxed out. At that point, searches might slow down or wait in a queue. This fixed-capacity model is still the case for virtually all cloud-based SIEM deployments.

One of the breakthroughs that Snowflake brought to data platforms was how it took advantage of the elasticity of the cloud. Users could tap into a virtually limitless pool of compute resources when needed, and “give back” resources when idle. The technology to make fully elastic scaling easy and instant was groundbreaking. The pricing model would need to be innovative as well.

Snowflake created a pricing model where users query their data with a virtual warehouse that has a T-shirt size. These sizes range from X-Small to 6X-Large (that’s XXXXXX-Large), with each size offering twice the compute of the one below it at twice the cost. The total cost is calculated from the number of seconds for which the warehouse is active. A Medium warehouse consumes 4 credits per hour, or roughly 0.0011 credits per second, so a Medium warehouse that runs for 84 seconds costs the organization about 0.0011 x 84 = 0.0924 credits. These warehouses can automatically suspend and resume based on query activity, hence the term usage-based pricing. But is this a good fit for security operations?
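The arithmetic above can be sketched in a few lines of Python. This is a rough illustration rather than an official calculator: it assumes Snowflake's published rates for standard warehouses (1 credit per hour for X-Small, doubling with each size) and the 60-second minimum that is billed each time a warehouse resumes. The names `CREDITS_PER_HOUR` and `credits_used` are made up for this example. Using the unrounded per-second rate gives about 0.0933 credits for the 84-second run, slightly above the 0.0924 figure that comes from rounding the rate to 0.0011.

```python
# Standard warehouse sizes in ascending order. Assumption: each size doubles
# the hourly credit rate of the one before it, starting at 1 credit/hour.
SIZES = [
    "X-Small", "Small", "Medium", "Large", "X-Large",
    "2X-Large", "3X-Large", "4X-Large", "5X-Large", "6X-Large",
]
CREDITS_PER_HOUR = {size: 2 ** i for i, size in enumerate(SIZES)}


def credits_used(size, active_seconds, minimum_seconds=60):
    """Credits consumed by one stretch of warehouse activity.

    Billing is per second, with an assumed minimum of `minimum_seconds`
    charged each time the warehouse starts or resumes.
    """
    billed_seconds = max(active_seconds, minimum_seconds)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


# The Medium warehouse from the example, active for 84 seconds.
medium_84s = credits_used("Medium", 84)
print(f"Medium for 84 seconds: {medium_84s:.4f} credits")
```

Multiplying the result by the organization's contracted price per credit converts it to dollars.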

Myth: My Team Would Cut Back on Investigations

When learning about usage-based pricing, some security leaders worry about analysts feeling pressured to cut short investigations. No one wants to adopt a new pricing model only to miss threats in the environment. In my work with dozens of security teams that have adopted data lakes, I have yet to see this happen.

The chart below shows why analysts don’t hold back under usage-based models. Compared to a traditional data warehouse or SIEM with fixed resources, a cloud data platform that elastically scales to meet demand is radically more cost-effective. During idle hours, the cost difference is substantial. In times of peak activity, the cost may be comparable. So while analyst activity may affect the degree to which savings are realized, cost savings are still achieved over time. Security analysts don’t need to worry if the organization is saving 80% over alternative approaches or “only” 50%. Properly informed during onboarding, analysts do not work differently on account of usage-based pricing.

Myth: We’d Get Surprise Bills

Many SIEMs get a bad rap for ingest-based pricing and its effect on detection coverage and IR. But at least they let you control which sources ship data, and tune down sensors that are “too good” at generating visibility. There’s a fear that in periods of heightened SOC activity, usage-based bills could become a nasty surprise at the end of the month.

The truth is that some forms of usage-based pricing do run the risk of spiky, even unexpectedly high, costs. Data platforms that charge by “bytes scanned” have been responsible for unpleasant CFO conversations for at least one SOC leader I worked with. In their case, it was the AWS Athena service, where the IR team ran a sweep that touched months’ worth of log data. As a result, a single investigation cost them over $5,000. Good thing it was a false alarm, right?

It’s unfair and counterproductive to expect SOC analysts to predetermine how many terabytes each search will scan. At scale, this is a recipe for disaster. In fact, AWS recently introduced a “provisioned capacity” pricing model for Athena where customers get access to always-on resources without per-byte pricing. While not exactly usage-based, AWS explained that the change was prompted by customers reporting that “it is difficult to forecast [the] Athena costs. Athena charges by the volume of data scanned, which is often difficult to predict as it depends on the size of your data set, the construction of the user queries, and the storage format for the data.”

The time-based usage model does not carry the same risk. There are only 24 hours in a day, and query timeout enforcement can automatically prevent runaway queries. I’ve seen cases where incident responders temporarily request beefier warehouses to meet their SLAs, but resizing can be subject to management approval, where cost/latency tradeoffs can be considered. Sizing can later be readjusted as the situation permits.
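To make the contrast concrete, here is a minimal sketch of why time-based metering has a ceiling while bytes-scanned metering does not. All function names and input numbers are hypothetical: the 4 credits per hour is the Medium warehouse rate, the 900-second timeout is an arbitrary example of a statement timeout setting, and the per-terabyte price is a placeholder rather than a quoted price.

```python
def max_daily_credits(credits_per_hour, hours_active=24):
    """Upper bound on one warehouse's daily spend: it cannot run more than 24 hours."""
    return credits_per_hour * hours_active


def max_query_credits(credits_per_hour, timeout_seconds):
    """Upper bound on warehouse time one query can consume before a timeout cancels it."""
    return credits_per_hour * timeout_seconds / 3600


def bytes_scanned_cost(terabytes_scanned, price_per_terabyte):
    """Cost under bytes-scanned pricing: it grows with the data touched, with no built-in cap."""
    return terabytes_scanned * price_per_terabyte


# Time-based: both numbers are known before anyone runs a query.
daily_ceiling = max_daily_credits(4)          # Medium warehouse, 24x7
query_ceiling = max_query_credits(4, 900)     # 15-minute timeout (illustrative)

# Bytes-scanned: the bill depends on how much data the sweep happens to touch.
small_sweep = bytes_scanned_cost(2, 5.0)      # placeholder price per TB
large_sweep = bytes_scanned_cost(1000, 5.0)   # same price, far larger scan

print(daily_ceiling, query_ceiling, small_sweep, large_sweep)
```

The first two values are fixed by configuration. The last two depend entirely on what the analyst's query touches, which is the part that is hard to forecast.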

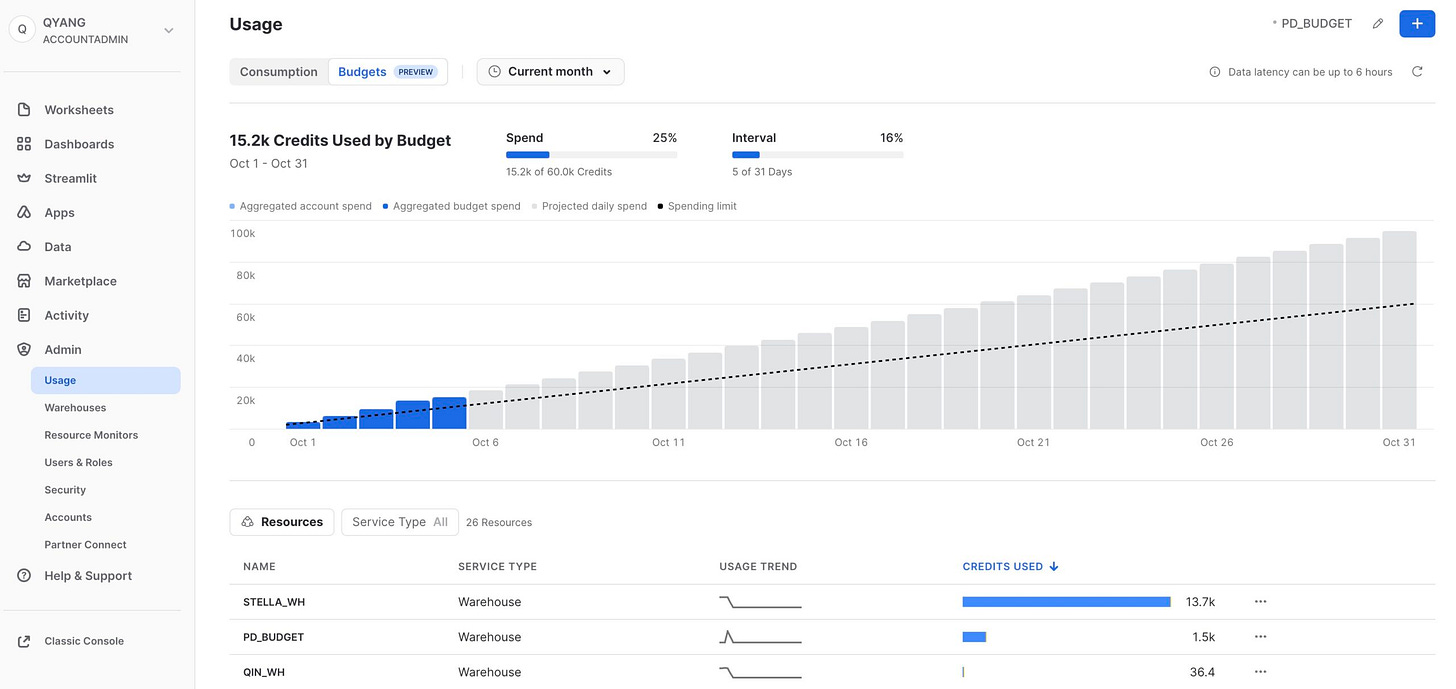

Across all of these options, Snowflake has released a slew of features to monitor costs and prevent overruns. Budgets, generally available as of last week, enable teams to set soft and hard limits to track, notify and adjust resources as needed to stay within preset budget guardrails. SOC teams can receive early warning when they are on track to blow through their weekly or monthly budgets and correct whatever changes have taken their spending off course.
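The early-warning idea can be illustrated with a simple straight-line projection. This is only a sketch of the concept, not a description of how Snowflake's Budgets feature computes its forecasts; the function name, the status labels, and the numbers are all hypothetical.

```python
def budget_status(spend_to_date, days_elapsed, days_in_period, limit):
    """Classify spending against a budget using a straight-line projection.

    Returns "over" if the limit is already reached, "warning" if the current
    pace would exceed it by the end of the period, and "ok" otherwise.
    """
    if spend_to_date >= limit:
        return "over"
    projected = spend_to_date / days_elapsed * days_in_period
    if projected > limit:
        return "warning"
    return "ok"


# Ten days into a 30-day month with a 1,000-credit budget.
print(budget_status(300, 10, 30, 1000))   # on pace for 900 credits
print(budget_status(400, 10, 30, 1000))   # on pace for 1,200 credits
```

A "warning" result ten days in leaves the team twenty days to find and fix whatever pushed spending off course.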

Myth: Low-Latency Threat Detection and Usage-Based Pricing Don’t Mix

Another concern I’ve heard from SOC leaders considering a move to usage-based pricing for their data platform is around detection latency. We need to run threat detection rules around the clock, they explain, and that would mean non-stop usage. Wouldn’t that break the usage-based pricing model?

Not necessarily. This is where “separation of compute from compute” comes into play. We can take advantage of Snowflake’s support for querying a database with multiple warehouses in a single account. There’s no limit to how many warehouses can be created, and each only incurs cost when it is active.

Security operations on the data lake can therefore divide its compute requirements across several warehouses. A small, low-cost warehouse can be running 24x7 against recent data for timely threat detection. In parallel, a much more powerful one can be standing by for ad hoc investigations. When a human analyst needs to search log data going back weeks or months, the large warehouse can return answers quickly and suspend itself within minutes of going idle.
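A back-of-the-envelope comparison shows why the split pays off. The sketch below assumes an X-Small warehouse (1 credit per hour) for always-on detection and a 2X-Large warehouse (32 credits per hour) that is active for a total of 90 minutes of investigations in a day. The sizes and the active time are illustrative assumptions, not recommendations or measurements.

```python
def daily_credits(credits_per_hour, active_hours):
    """Credits consumed in a day by a warehouse that bills only while active."""
    return credits_per_hour * active_hours


# Split setup: small warehouse always on, large warehouse only when needed.
detection = daily_credits(1, 24)         # X-Small running 24x7
investigation = daily_credits(32, 1.5)   # 2X-Large, 90 active minutes in total
split_total = detection + investigation

# Fixed-capacity alternative: keep the large warehouse running all day.
always_on_large = daily_credits(32, 24)

print(f"Split warehouses: {split_total} credits/day")
print(f"Large warehouse 24x7: {always_on_large} credits/day")
```

Under these assumptions, the split setup uses 72 credits per day against 768 for the always-on large warehouse, while analysts still get the larger warehouse's speed when they need it.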

Having multiple compute clusters available, each with its own balance of cost and performance, enables security operations with low-latency use cases to benefit from usage-based pricing. Snowflake’s product direction towards services that aren’t tied to running warehouses, like Snowpipe for ingest and serverless tasks for transformations, means ever-increasing granularity aligning each use case’s cost to its latency requirements.

Myth: The Benefits Aren’t Worth the Added Complexity

While leading cloud data platforms enable security leaders to save millions on detection and response, adoption has been gradual. Fears around the complexity of usage-based cost models may have played a role in that. The reality is that managing usage-based costs is easier than ever, but you don’t need to take my word for it.

One benefit of the “pay for what you use” model is how it supports experimentation. A team can try a usage-based platform without any up-front commitment and get first-hand experience with sizing resources, per-second metering, and cost guardrails. With Snowflake, for example, an account can be provisioned with a maximum budget of a few hundred dollars. The team can then freely experiment with querying data using warehouses of different sizes and know in advance exactly how much the experiment will cost. (Side note: you can get a good amount of free credits for testing out a security data lake but you didn’t hear it from me. I don’t even work there anymore.)

Every data platform has a fixed relationship between cost and performance. For most SIEMs, that relationship is hidden from users. The vendor charges you for ingest or licenses based on how much search activity they expect you to perform, and they keep infrastructure costs in check by throttling your speed. Even with SIEMs that offer some level of control over your deployment’s compute power, it’s usually so cumbersome to scale up and down that the SOC is compelled to operate at a fixed level of compute power.

Usage-based pricing blasts this model apart by removing the SIEM vendor’s “safe” assumptions for what the SOC will need to do its job. Customers get to align their spend to actual requirements, and in cybersecurity this translates to substantial savings when unused resources don’t incur costs. It also fosters better customer support, as Snowflake CRO Chris Degnan explained in Consumption-based Pricing: Ensuring Every Customer’s Value and Success, “Your account team becomes your day-to-day advocate and an integral part of your project team, working hard to earn your business every day.”

Perhaps most importantly, it removes the hard caps on performance that otherwise create search-related coffee breaks. When the SOC goes to DEFCON 1 (that’s nuclear war, not the event in Vegas), the option to temporarily 10x search performance is something that even hard-nosed CFOs can get behind.

For all of these reasons, the move from fixed or ingest-based SIEM pricing to a usage-based model should be considered a sign of SOC maturity. While most SIEMs don’t yet support usage-based data platform options, some do. Security leaders should bear in mind that some preparation and best practices are needed for such a shift. But the flexibility to pay for the time and performance your team needs can do wonders for your budget while helping to detect and respond to threats when seconds count.